Object Detection with YOLO: A Gentle Introduction

Image Credit: Shubham Gupta

Introduction

Computer vision is an interdisciplinary field of study that focuses on enabling machines to interpret and understand visual data such as images and videos. The goal of computer vision is to develop algorithms and techniques that allow computers to extract meaningful information from visual data, similar to the way humans perceive and understand the world around them.

Some of the key tasks in computer vision include:

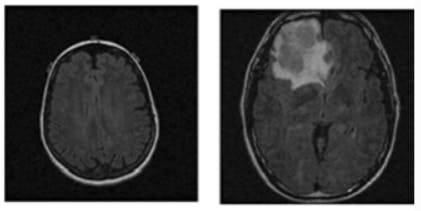

- Image classification involves categorizing an image into one or more predefined classes or categories. For example, image classification can be used to identify if a Magnetic Resonance Imaging (MRI) scan of the brain has a tumor or not.

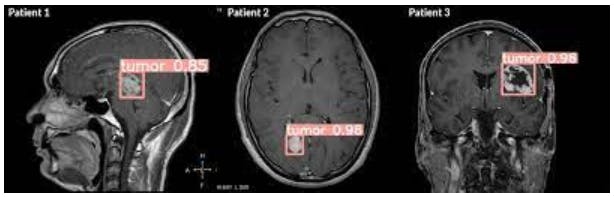

Object detection: involves identifying and locating objects within an image or video. For example, object detection can be used to locate and identify brain tumors within Magnetic Resonance Imaging (MRI) scans using bounding boxes.

Segmentation: involves dividing an image into multiple segments or regions, each of which represents a different object or part of the image. For example, image segmentation can be used to identify and group pixels in an MRI scan as a region with a tumor and a region without a tumor.

Having established a foundation on the basics of computer vision, let's now dive into the specific subject of object detection.

Applications of Object Detection

Object detection has a wide range of applications in various fields, including but not limited to:

Surveillance: It is used to monitor public spaces, identify potential threats, and detect suspicious behavior.

Autonomous vehicles: It is used to recognize and avoid obstacles, pedestrians, and other vehicles.

Robotics: It is used to identify and manipulate objects, and navigate in dynamic environments.

Healthcare: It is used in medical imaging for diagnosis and treatment planning.

Object Detection Techniques

There are several techniques for object detection in computer vision. Some of the most popular techniques are:

Sliding Windows

The sliding window method scans an image with a fixed-size window at different positions and scales to detect objects of interest. In effect, it divides the input image into many overlapping sub-images, each of which is analyzed by a classifier to determine whether it contains the object of interest. The main limitation of the method is its computational cost: the classifier has to run once per window, and the number of windows grows quickly with the image size and the number of scales.
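To get a feel for that cost, here is a minimal sketch that enumerates the windows and counts how many classifier calls a single image would need. The function names, the square windows, and the settings (a 640x480 image, three window sizes, a stride of 16 pixels) are assumptions chosen for illustration, not part of any particular library.

```python
from typing import Iterator, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def sliding_windows(image_w: int, image_h: int,
                    window: int, stride: int) -> Iterator[Box]:
    """Yield square windows of side `window`, moved `stride` pixels at a time."""
    for y in range(0, image_h - window + 1, stride):
        for x in range(0, image_w - window + 1, stride):
            yield (x, y, x + window, y + window)


def count_windows(image_w: int, image_h: int,
                  sizes: Sequence[int], stride: int) -> int:
    """Total number of windows (one classifier call each) over all sizes."""
    return sum(
        sum(1 for _ in sliding_windows(image_w, image_h, size, stride))
        for size in sizes
    )


if __name__ == "__main__":
    # Hypothetical settings, picked only to illustrate the growth in cost.
    print(count_windows(640, 480, sizes=(64, 128, 256), stride=16))  # 2133
```

Even with these modest settings, the classifier would run more than two thousand times on one image, which is why this approach is hard to use in real time.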

Region-based Convolutional Neural Networks (R-CNNs)

Unlike the sliding window approach, which runs a classifier on every window, R-CNNs examine only a limited set of candidate regions. R-CNNs work by first generating a set of region proposals using a selective search algorithm, which identifies regions in the image that are likely to contain objects. These region proposals are then passed through a convolutional neural network (CNN) to extract a fixed-length feature vector for each region. Finally, a classifier is applied to each feature vector to determine the object class, and the box location is refined. Note that the original R-CNN is trained in several separate stages rather than end-to-end.

The R-CNN approach was further improved with the introduction of Fast R-CNN, which shares the convolutional computation across all proposals in an image, and Faster R-CNN, which replaces selective search with a learned region proposal network. R-CNNs and their variants have achieved strong results on object detection benchmarks and have been widely adopted in various applications. However, these models can be computationally expensive and require large amounts of labeled data for training.

You Only Look Once (YOLO)

YOLO (You Only Look Once) is a real-time object detection algorithm that uses a single neural network to detect objects in an image. YOLO works by dividing the input image into a grid of cells. Each cell predicts bounding boxes, a confidence score for each box, and class probabilities for the objects whose centers fall inside that cell.

Unlike sliding window and region proposal methods, which run a classifier many times over different parts of an image, YOLO looks at the whole image at once and predicts object bounding boxes and class probabilities in a single feed-forward pass of the neural network. This makes YOLO fast enough for real-time object detection applications.

To predict object bounding boxes and class probabilities, YOLO first applies a series of convolutional layers to the input image to extract feature maps. The feature maps are then used to predict bounding boxes and class probabilities for each cell in the grid using a set of convolutional and fully connected layers.
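The grid idea can be made concrete with a small sketch. It follows the setup described in the original YOLO paper, where the image is split into an S x S grid, the cell containing an object's center is responsible for that object, and each cell predicts B boxes (x, y, width, height, confidence) plus C class probabilities. The function names are hypothetical, and later YOLO versions organize their outputs differently.

```python
from typing import Tuple


def responsible_cell(x_center: float, y_center: float,
                     grid_size: int) -> Tuple[int, int]:
    """Return (row, col) of the grid cell containing a normalized box center.

    Coordinates are expected in [0, 1]; a center exactly on the right or
    bottom edge is clamped into the last cell.
    """
    col = min(int(x_center * grid_size), grid_size - 1)
    row = min(int(y_center * grid_size), grid_size - 1)
    return row, col


def output_shape(grid_size: int, boxes_per_cell: int,
                 num_classes: int) -> Tuple[int, int, int]:
    """Shape of the prediction tensor: 5 values per box plus class scores."""
    return (grid_size, grid_size, boxes_per_cell * 5 + num_classes)


if __name__ == "__main__":
    # Settings from the YOLOv1 paper: S = 7, B = 2, C = 20 (Pascal VOC classes).
    print(responsible_cell(0.5, 0.5, grid_size=7))                  # (3, 3)
    print(output_shape(grid_size=7, boxes_per_cell=2, num_classes=20))  # (7, 7, 30)
```

With those settings the whole image is described by a single 7 x 7 x 30 tensor, which the network produces in one forward pass.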

One of the advantages of YOLO is that it detects multiple objects in an image in the same forward pass. In addition, YOLO achieves competitive accuracy and can detect objects of various sizes and aspect ratios. However, YOLO may struggle with small objects, especially when they appear in groups, and with objects that are heavily occluded.

The subsequent articles will focus on implementing the different versions of YOLO, from YOLOv4 to YOLOv8, the latest version at the time of writing.

Object Detection Terminologies

Bounding Box

Bounding boxes are like little frames that we use to put objects of interest in the spotlight. Imagine you're watching a movie and there's a particular scene where a character is holding a briefcase that's important to the plot. If you were asked to describe where the briefcase is in the scene, you'd likely use your fingers to frame the area around the briefcase. This is essentially what a bounding box does in object detection: it creates a virtual frame around an object of interest so that it can be detected and classified by an algorithm.

Bounding boxes are rectangles, but their sizes and aspect ratios vary with the object being detected. For instance, a bounding box for a car might be much larger and wider than a bounding box for a pedestrian. To make sure the object is properly detected, the bounding box needs to fit tightly around the object. This is crucial for the overall accuracy of the object detection algorithm. An imprecise bounding box can lead to misclassification or false positives.

Bounding Box Format

There are different formats for representing the coordinate points of the bounding boxes. The three most commonly used formats are:

YOLO

COCO

Pascal VOC

The YOLO format was introduced in the YOLOv1 paper and has been in use ever since. It defines four values: the center coordinates of the bounding box and its width and height.

Center Coordinates: These are the x and y coordinates of the center point of the bounding box, relative to the image dimensions. The coordinates are usually normalized to the range of [0, 1], where (0, 0) represents the top-left corner of the image, and (1, 1) represents the bottom-right corner.

Width and Height: These values represent the width and height of the bounding box, also relative to the image dimensions. Like the center coordinates, these values are normalized to the range of [0, 1].
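For comparison, the other two formats use absolute pixel values: COCO stores a box as [x_min, y_min, width, height], and Pascal VOC stores it as [x_min, y_min, x_max, y_max]. The sketch below converts between the three. The helper names are made up for this example, and the 800x400 image is just a convenient test case.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def voc_to_yolo(box: Box, image_w: int, image_h: int) -> Box:
    """Pascal VOC pixels (x_min, y_min, x_max, y_max) -> normalized YOLO box."""
    x_min, y_min, x_max, y_max = box
    return (
        (x_min + x_max) / 2 / image_w,   # x_center in [0, 1]
        (y_min + y_max) / 2 / image_h,   # y_center in [0, 1]
        (x_max - x_min) / image_w,       # width in [0, 1]
        (y_max - y_min) / image_h,       # height in [0, 1]
    )


def yolo_to_voc(box: Box, image_w: int, image_h: int) -> Box:
    """Normalized YOLO box -> Pascal VOC pixels (x_min, y_min, x_max, y_max)."""
    x_center, y_center, width, height = box
    half_w = width * image_w / 2
    half_h = height * image_h / 2
    return (
        x_center * image_w - half_w,
        y_center * image_h - half_h,
        x_center * image_w + half_w,
        y_center * image_h + half_h,
    )


def voc_to_coco(box: Box) -> Box:
    """Pascal VOC pixels -> COCO pixels (x_min, y_min, width, height)."""
    x_min, y_min, x_max, y_max = box
    return (x_min, y_min, x_max - x_min, y_max - y_min)


if __name__ == "__main__":
    voc_box = (100, 100, 300, 300)            # a box in an 800x400 image
    print(voc_to_yolo(voc_box, 800, 400))     # (0.25, 0.5, 0.25, 0.5)
    print(voc_to_coco(voc_box))               # (100, 100, 200, 200)
```

Because YOLO values are normalized, the same label stays valid when the image is resized, while COCO and Pascal VOC boxes have to be rescaled along with the image.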

Intersection over Union (IoU)

Intersection over Union (IoU) is like a matchmaker for object detection algorithms - it measures how well the algorithm is "matching" the predicted bounding box with the actual object in the image.

Image Credit: Kukil

Think of it like a Venn diagram - the intersection between the predicted bounding box and the ground truth bounding box represents the part where the algorithm got it right. The IoU score is the area of that intersection divided by the area of the union of the two boxes. It ranges from 0 (no overlap at all) to 1 (the two boxes coincide exactly), so a higher score means the object is better localized.
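Here is a minimal sketch of the IoU computation for two axis-aligned boxes. It assumes boxes are given as (x_min, y_min, x_max, y_max), and the function name `iou` is just a choice for this example.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


if __name__ == "__main__":
    # Two 2x2 boxes that share a 1x1 corner: IoU = 1 / (4 + 4 - 1) = 1/7.
    print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))  # 0.143
```

Note how quickly the score drops: two boxes that look like a reasonable match by eye can still have an IoU well below 0.5.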

Non-Maximum Suppression (NMS)

Imagine you're a detective trying to solve a crime. You have multiple eyewitness accounts, each pointing to a different suspect. How do you know which one is the real culprit?

Object detection algorithms face a similar problem. When analyzing an image, the algorithm may produce multiple bounding boxes that all cover the same object. But which one is the most accurate?

This is where non-maximum suppression (NMS) comes in. NMS is like a detective who sorts through the eyewitness accounts and eliminates the false leads to identify the true criminal. NMS is a post-processing technique that removes redundant bounding boxes and keeps only the most confident ones. It works greedily: it keeps the box with the highest confidence score, discards every remaining box whose IoU with that box exceeds a chosen threshold, and then repeats the process with the boxes that are left.
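The procedure can be sketched in a few lines of plain Python. This is an illustrative version of greedy NMS, not the implementation used by any specific YOLO release; the function names and the default threshold of 0.5 are assumptions. In practice, NMS is usually applied separately for each class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS; returns indices of kept boxes, most confident first."""
    # Candidate indices sorted by descending confidence.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)          # most confident remaining box
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order
                 if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    # The second box overlaps the first (IoU = 81/119) and is suppressed.
    print(nms(boxes, scores))  # [0, 2]
```

A lower threshold suppresses more aggressively, which removes more duplicates but can also delete a genuine detection of a second object standing close to the first.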

Mean Average Precision (mAP)

Mean average precision (mAP) is like a report card for object detection algorithms - it tells us how well the algorithm is performing across different categories and levels of difficulty.

Think of it like a teacher grading a student's performance on multiple assignments - mAP measures the algorithm's accuracy on different types of objects and backgrounds, and averages them to provide an overall score. This helps us understand the algorithm's strengths and weaknesses and identify areas for improvement.

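The mAP score is calculated by comparing the predicted bounding boxes to the ground truth boxes. A prediction counts as a true positive when it has the right class and its IoU with a ground truth box reaches a chosen threshold (0.5 is a common choice). From these matches, the average precision (AP) is computed for each class: AP summarizes the precision-recall curve of that class as a single score between 0 and 1. The mAP score is the mean of the AP scores across all classes. Some benchmarks also average over several IoU thresholds or report results separately by difficulty level.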
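As a rough sketch of the calculation, the code below computes AP for one class as the area under the precision-recall curve, using the "all-point" interpolation found in some benchmark toolkits, and then averages the per-class scores. It assumes the detections have already been sorted by descending confidence and matched against the ground truth, so each one is simply flagged as a true or false positive. The function names and the sample numbers are illustrative only.

```python
from typing import Dict, Sequence


def average_precision(is_true_positive: Sequence[bool],
                      num_ground_truth: int) -> float:
    """AP for one class from detections sorted by descending confidence."""
    if num_ground_truth == 0:
        return 0.0

    precisions, recalls = [], []
    true_positives = 0
    for rank, hit in enumerate(is_true_positive, start=1):
        true_positives += int(hit)
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)

    # Interpolate: each precision becomes the best precision at that
    # recall level or any higher one.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the area under the interpolated precision-recall curve.
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP as the plain mean of per-class AP scores."""
    return sum(ap_per_class.values()) / len(ap_per_class)


if __name__ == "__main__":
    # Three detections (hit, miss, hit) for a class with 2 ground truth boxes.
    ap_car = average_precision([True, False, True], num_ground_truth=2)
    print(round(ap_car, 3))  # 0.833
    print(mean_average_precision({"car": 1.0, "person": 0.5}))  # 0.75
```

The false positive in the middle of the ranking is what pulls the AP below 1: the second object is found, but only after a wrong detection was ranked above it.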

Conclusion

I hope that this article has given you a better understanding of what object detection is and how it works. Be sure to check out the upcoming article on implementing YOLOv4 for object detection.

Thank you for reading, and stay tuned for more exciting content!